Data Triage with SAS

I’m getting my papers written for the 2011 Western Users of SAS Software (WUSS) meeting. All three papers are in the SAS Essentials strand, which is designed to give relatively new programmers a jump start in their careers. I’ve given some version of this talk a few times before, and I sometimes think it is pretty basic. I kind of want to do cool stuff with macros and the parallel analysis criterion and multiple imputation and, like the ads for barbells in the back of the comic book for the ninety-three pound weaklings say, “Impress your friends!” (Or are those the Viagra ads? I get confused.)

Then I think of a very wise comment my former business partner and co-founder of Spirit Lake Consulting, Inc. once made to a new Ph.D. we had hired. He said,

I know the type of low bandwidth, low tech site we have is not going to impress your colleagues at the university you just graduated from, but guess what, we’re not in business to impress those people. We’re in business to serve a certain audience and this is what meets that audience’s needs.

I remember Erich’s advice and think about the intended audience. These sessions are billed as suitable for novice programmers. I remember the first SAS conference I attended – SUGI 10, in Reno, NV. There weren’t a lot of engineers who were single mothers of preschoolers back then. I called ahead and reserved a babysitter at the hotel. She took Maria to check out the elephants at the Golden Nugget, where the conference was held. Maria now covers the social media beat for ESPN. It was a long time ago. I still remember, though, wandering around and trying to find something that was not impossibly over my head.

So … I think of me, Maria and the elephants as I get ready for each talk and wonder, “Is this really too basic?”

In case you’re dying to know, Part 2 of my SAS Essentials talk is on Data Triage and this is what I have to say.

Triage, as both the Merriam-Webster dictionary and any medical professional (of which I am not one) know, is “the sorting of and allocation of treatment to patients and especially battle and disaster victims according to a system of priorities designed to maximize the number of survivors”.

I call these steps data triage because even if you don’t have time to do anything else, do this.

Learn to stare at your data. I’m serious. If there’s ever a meeting where the results are completely wrong because of some fundamental flaw in the data, when the client is pointing his finger and screaming,

“Didn’t you even LOOK at these data?”

you don’t want to be the one at the end of that pointing finger.

Finding and fixing data disasters is a two-step process. You need to do both of these steps, but if you don’t do the first, which I refer to as “data triage”, I just might come to your house and personally give you a good talking-to. The first step uses the PRINT, MEANS and FREQ procedures to quickly identify any glaringly obvious problems with your data. Here’s what to do and why you do it.

Print out the first 10 records.

PROC PRINT DATA = lib.g8_student07 (OBS = 10 ) ;

RUN ;

Don’t forget the OBS = 10! You don’t want to print out a dataset of 357,000 records! If something is completely off, it should show up in the first 10 records. You may be thinking that you should print more than 10, but remember, many of these datasets have 500 or more variables, so even 10 records means 5,000 or more numbers, and there are more efficient ways to analyze your data than staring at that many. At this point, you are just looking for glaring errors, like the values entered for gender being “dog”, “tiger” and “pickle”. The most likely error you’ll spot here is that at some point in the INPUT statement a mistake was made and now all of the data are off by one column.

PROC MEANS DATA = lib.g8_student07 ;

RUN ;

At this point, you’re only looking for one thing, and that is whether values are out of range. For example, the items are scored on a 1 – 5 scale and the maximum of many of the variables is 8. Occasionally, for perfectly good reasons other than to annoy you, people code data as 8, 99 or whatever to show that it was “not administered”, “not answered”, “did not know” and so on. There are some interesting analyses that can be done of patterns of missing data, but now is not the time to do them. If you want to use those missing value codes later, good for you, but given how many times these have caused completely incorrect results, you probably want to set these out-of-range values to missing in your analytic file. If you do need an analysis of non-respondents by type, you can always go back to the raw data file and read it in with the missing codes.
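
Setting those codes to missing might look something like the sketch below. The item names (item1 through item20), the missing codes (8 and 99) and the output dataset name are all assumptions for illustration; substitute whatever your own codebook says.

```sas
/* Sketch only. ASSUMPTIONS: items are named item1-item20,       */
/* valid scores are 1-5, and 8 and 99 are the missing codes.     */
/* work.student_clean is a hypothetical name for the analytic    */
/* file; the original lib.g8_student07 is left untouched.        */
DATA work.student_clean ;
   SET lib.g8_student07 ;
   ARRAY items {*} item1 - item20 ;
   DO i = 1 TO DIM(items) ;
      /* Replace each missing code with the SAS missing value */
      IF items{i} IN (8, 99) THEN items{i} = . ;
   END ;
   DROP i ;
RUN ;

/* Re-check the range: the maximum should now be no higher than 5 */
PROC MEANS DATA = work.student_clean N NMISS MIN MAX ;
   VAR item1 - item20 ;
RUN ;
```

Writing to a new dataset rather than overwriting the original keeps the coded values available if you later want that analysis of non-respondents by type.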

PROC FREQ DATA = lib.g8_student07 NOPRINT ;

TABLES idvar / OUT = studentfreq (WHERE = ( COUNT > 1 )) ;

FORMAT idvar ;

RUN ;

This is the step where you identify duplicate ID values. In almost every circumstance, you are going to have a unique identifier that is supposed to be, well, unique. This can be a social security number, transaction ID, employee number, whatever. Be sure you don’t forget the NOPRINT option!!! If you happen to leave off the WHERE clause in the next statement or make some other error, the last thing you want is a frequency table of the 2,000,000 user IDs printed out. The frequencies are going to be output to a dataset named studentfreq. Only values where the count is greater than 1 will be written to that dataset. Also, note that there are two sets of parentheses in the WHERE clause.

I want to force it to use the unformatted value, so I included a FORMAT statement with the variable name, followed by no format whatsoever.

The next step prints the first ten duplicate ID numbers.

PROC PRINT DATA = studentfreq (OBS = 10 ) ;

RUN ;

I used the OBS = 10 option just in case. I might have used the wrong variable, forgotten the COUNT > 1, accidentally typed COUNT = 1, or for any of a bunch of other reasons ended up with 2,000,000 records in this dataset, and I don’t want them all printing out.
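
If you want to know how bad things are before you print anything, one option is to count the rows in studentfreq first. This is a small sketch that is not part of the original steps; the column name n_duplicate_ids is just a label I made up for the output.

```sas
/* Sketch: count how many ID values appear more than once.       */
/* studentfreq holds one row per duplicated ID, so its row count */
/* is the number of duplicated IDs.                              */
/* n_duplicate_ids is a hypothetical label for the result.       */
PROC SQL ;
   SELECT COUNT(*) AS n_duplicate_ids
   FROM studentfreq ;
QUIT ;
```

A number close to the size of your whole file usually means one of the mistakes described above rather than millions of genuine duplicates.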

If you are working with large datasets on a server, errors like this can put you over the disk quota for your account. If you are using SAS interactively, you will certainly run into problems.

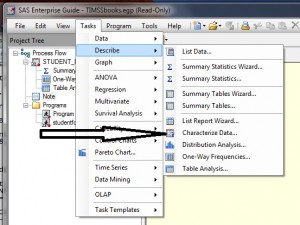

There is a second option for getting a first look at your data, and that’s using the CHARACTERIZE DATA task in SAS Enterprise Guide. You can find it under TASKS > DESCRIBE > CHARACTERIZE DATA.

This won’t give you the exact same results as above. What it will give you is a frequency distribution of the 30 most common values for each categorical variable and summary statistics (N, number missing, mean, minimum, maximum and median) for each numeric variable.
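
If you don’t have Enterprise Guide handy, a rough code analogue of the numeric half of that output is to ask PROC MEANS for the same statistics by name. This is a sketch of an approximation, not what the task itself runs, and it does not cover the frequency distributions for categorical variables.

```sas
/* Sketch: the same summary statistics for every numeric variable. */
/* N = non-missing count, NMISS = number missing.                   */
PROC MEANS DATA = lib.g8_student07 N NMISS MEAN MIN MAX MEDIAN ;
RUN ;
```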

It can also give you graphs of each variable’s distribution and save datasets of the descriptive statistics and frequencies. I don’t usually ask for the graphs. With the datasets I use, which have hundreds of variables, the graphs take a lot of time and don’t provide much useful information.

The datasets can be useful. For example, I could open up the descriptive statistics dataset and sort by the number of missing observations to see which variables had a high percentage of missing data.

That, however, is getting to step two, which is more detailed analysis of your data quality.

Even though these steps are basic, I have a reason for emphasizing them year after year, and it is the exact same reason that I drilled my daughters on their multiplication tables. Over-learning. Things you must know need to be repeated until you will never forget them. I haven’t been in second grade for many decades and yet I still know that 12*12 = 144. These steps are that important. If you doubt me, just imagine that screaming client and his jabbing finger.

Tomorrow I may write about step 2 of data quality. Or maybe I’ll write about Part 1 of my talk. (Aren’t you even a little curious what comes before this?)

Or maybe I won’t do any of that, because Maria just flew in from Boston with my granddaughter who, coincidentally, is the exact same age Maria was when I attended that first SAS conference. I told you it was a long time ago.
